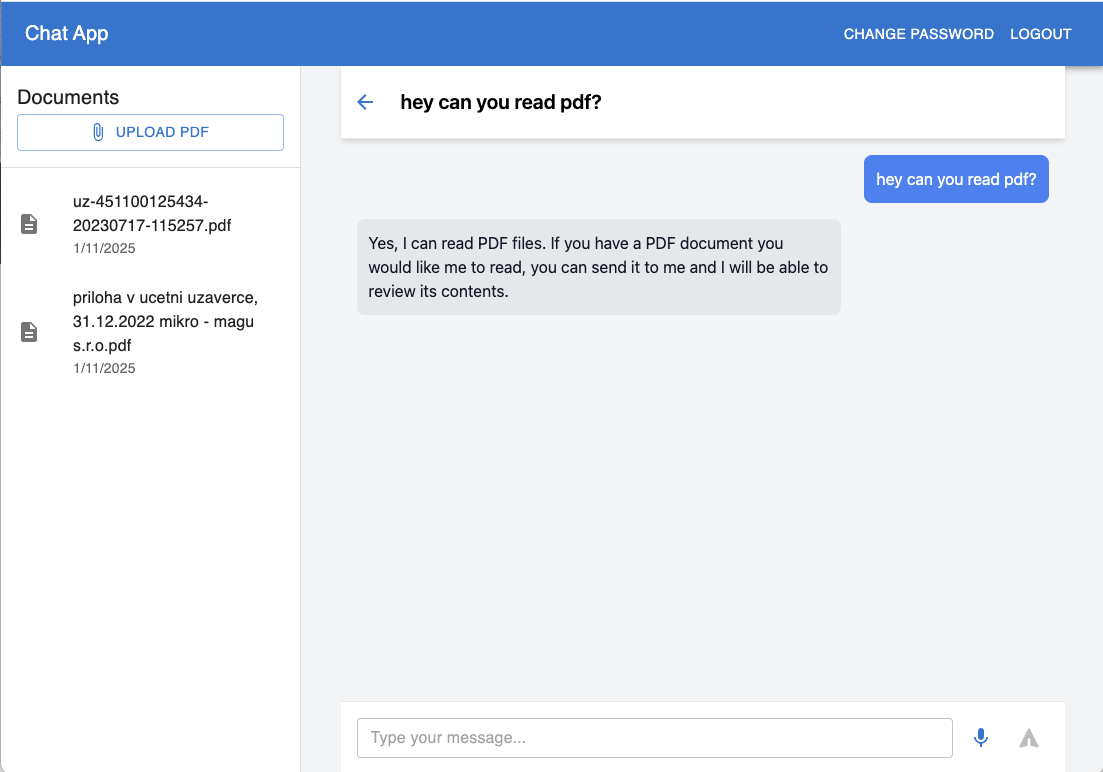

Building a Modern AI Chat Interface: React + TypeScript Implementation

I built a clean React frontend that connects to my previous .NET backend project. The UI lets you chat with AI models (ChatGPT and Claude) while handling voice input and PDF document processing.

Key Features

- Voice-to-text conversion for natural conversations

- PDF document upload and analysis

- Real-time AI chat with streaming responses

- Clean Material-UI + Tailwind CSS interface

- Chat history management
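Streaming responses arrive as a series of small text chunks rather than one finished message, so the UI has to keep appending to the assistant message that is currently being written. The sketch below shows one way that state update could look. The `ChatMessage` shape and the `appendStreamChunk` helper are assumptions made for illustration, not code taken from the repository.

```typescript
// Hypothetical message shape; the real app may store more fields.
interface ChatMessage {
  role: "user" | "assistant";
  content: string;
}

// Returns a new array with the chunk appended to the assistant message in
// progress, or starts a new assistant message if the last entry came from
// the user (or the list is empty). The input array is never mutated, which
// keeps the helper safe to use with React state.
function appendStreamChunk(messages: ChatMessage[], chunk: string): ChatMessage[] {
  const last = messages[messages.length - 1];
  if (last !== undefined && last.role === "assistant") {
    const updated: ChatMessage = { role: "assistant", content: last.content + chunk };
    return [...messages.slice(0, -1), updated];
  }
  return [...messages, { role: "assistant", content: chunk }];
}

// Usage: feed chunks in the order they arrive from the backend.
let chatMessages: ChatMessage[] = [{ role: "user", content: "Hi" }];
for (const chunk of ["Hel", "lo", "!"]) {
  chatMessages = appendStreamChunk(chatMessages, chunk);
}
console.log(chatMessages.map((m) => `${m.role}: ${m.content}`));
// [ 'user: Hi', 'assistant: Hello!' ]
```

In a React component the same helper could be passed to a state setter, for example `setMessages((prev) => appendStreamChunk(prev, chunk))`, assuming the messages live in `useState`.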

Simple Setup

- Clone repo

- npm install

- Configure backend URL in .env

- npm start
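As a rough sketch of the "configure backend URL" step, here is how the frontend could read that value and build request URLs from it. The variable name `REACT_APP_API_URL`, the fallback `http://localhost:5000`, and both helper functions are assumptions for illustration; check the repository for the names it actually uses.

```typescript
// Assumed variable name. If the project uses Create React App, only
// variables prefixed with REACT_APP_ are exposed to browser code.
const BACKEND_URL_KEY = "REACT_APP_API_URL";
// Assumed local default for development.
const DEFAULT_BACKEND_URL = "http://localhost:5000";

type EnvVars = Record<string, string | undefined>;

// Picks the configured backend URL (or the default) and strips trailing
// slashes so paths can be joined predictably.
function resolveBackendUrl(env: EnvVars): string {
  const raw = (env[BACKEND_URL_KEY] ?? "").trim();
  const url = raw === "" ? DEFAULT_BACKEND_URL : raw;
  return url.replace(/\/+$/, "");
}

// Joins the backend URL and an endpoint path with exactly one slash.
function apiUrl(env: EnvVars, path: string): string {
  return `${resolveBackendUrl(env)}/${path.replace(/^\/+/, "")}`;
}

console.log(apiUrl({ REACT_APP_API_URL: "https://api.example.com/" }, "/chat"));
// "https://api.example.com/chat"
```

In the app itself you would pass `process.env` instead of a literal object; taking the environment as a parameter just keeps the helper easy to test.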

The UI is minimal but functional - single chat window with document sidebar, voice input button, and message history. Perfect for applications needing AI chat with document analysis capabilities.

Check out the code to see how it all fits together!

https://github.com/ivanjurina/chatgpt-claude-react-app

ChatGPT & Claude Integration for .NET: With Voice & PDF Processing

I've built a .NET Web API that streamlines integration with ChatGPT and Claude, featuring voice-to-text conversion and PDF data extraction out of the box. It's designed for production use and handles all the complex pieces - from AI streaming responses to document processing.

Core Features

- Voice & Documents: Convert speech to text and extract data from PDFs

- AI Integration: Real-time streaming with ChatGPT and Claude 3

- Security: JWT authentication, BCrypt hashing

- Data: EF Core with SQLite, repository pattern

- API Docs: Full Swagger documentation

Quick Start

# Clone and setup

git clone https://github.com/ivanjurina/chatgpt-claude-dotnet-webapi.git

cd chatgpt-claude-dotnet-webapi

# Add your API keys to appsettings.json, then:

dotnet ef database update

dotnet run

Architecture

Built with clean architecture principles, proper error handling, and efficient async patterns throughout. The project provides a solid foundation for building production-ready AI applications.

Use Cases

Ideal for:

- Voice-enabled AI interfaces

- Document processing systems

- Real-time chat applications

- Enterprise applications needing multiple AI models

Check out the repository for more details and setup instructions.

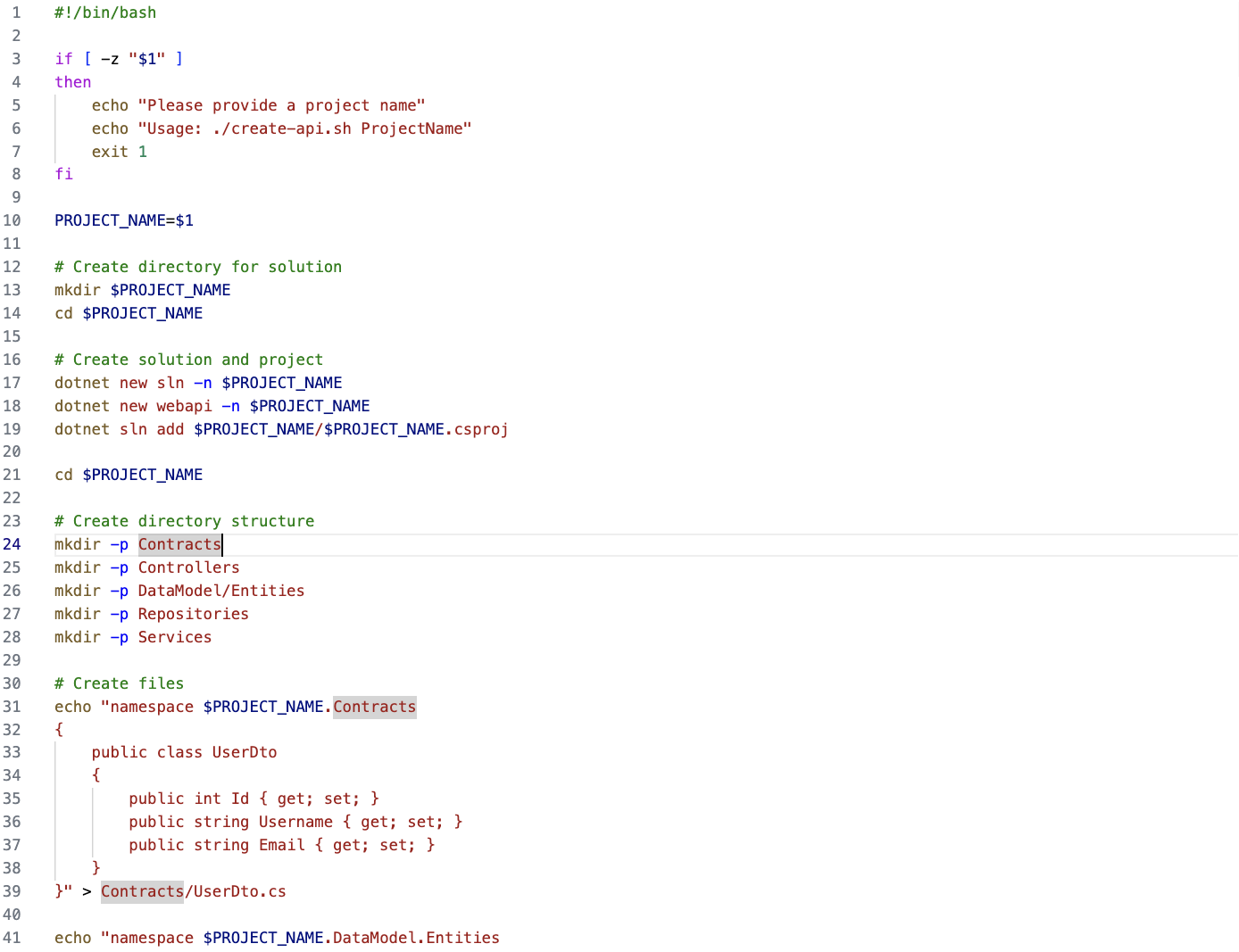

Generating .NET Web API Microservices from Template

Inspired by a colleague's efficient approach to standardizing microservices, I created a bash script template generator for .NET Web API projects. The script creates a standardized architecture with controllers, services, repositories, and data models, setting up Entity Framework Core and Swagger UI in the process.

With a single command:

./create-api.sh ProjectName

You get a complete microservice structure following clean architecture principles. The template includes a layered design, basic User entity implementation, and an in-memory database for rapid development.

This approach ensures all microservices follow the same structure, making maintenance and cross-service development more straightforward for team members.

Find the template and documentation on GitHub: dotnet-webapi-template-script

Or here:

#!/bin/bash

if [ -z "$1" ]

then

echo "Please provide a project name"

echo "Usage: ./create-api.sh ProjectName"

exit 1

fi

PROJECT_NAME=$1

# Create directory for solution

mkdir "$PROJECT_NAME"

cd "$PROJECT_NAME" || exit 1

# Create solution and project

dotnet new sln -n "$PROJECT_NAME"

dotnet new webapi -n "$PROJECT_NAME"

dotnet sln add "$PROJECT_NAME/$PROJECT_NAME.csproj"

cd "$PROJECT_NAME" || exit 1

# Create directory structure

mkdir -p Contracts

mkdir -p Controllers

mkdir -p DataModel/Entities

mkdir -p Repositories

mkdir -p Services

# Create files

echo "namespace $PROJECT_NAME.Contracts

{

public class UserDto

{

public int Id { get; set; }

public string Username { get; set; }

public string Email { get; set; }

}

}" > Contracts/UserDto.cs

echo "namespace $PROJECT_NAME.DataModel.Entities

{

public class User

{

public int Id { get; set; }

public string Username { get; set; }

public string Email { get; set; }

}

}" > DataModel/Entities/User.cs

echo "using Microsoft.EntityFrameworkCore;

using $PROJECT_NAME.DataModel.Entities;

namespace $PROJECT_NAME.DataModel

{

public class ApplicationDbContext : DbContext

{

public ApplicationDbContext(DbContextOptions<ApplicationDbContext> options)

: base(options)

{

Database.EnsureCreated();

}

public DbSet<User> Users { get; set; }

protected override void OnModelCreating(ModelBuilder modelBuilder)

{

modelBuilder.Entity<User>().HasKey(u => u.Id);

// Seed some data

modelBuilder.Entity<User>().HasData(

new User { Id = 1, Username = \"user1\", Email = \"user1@example.com\" },

new User { Id = 2, Username = \"user2\", Email = \"user2@example.com\" }

);

}

}

}" > DataModel/ApplicationDbContext.cs

echo "using $PROJECT_NAME.DataModel;

using $PROJECT_NAME.DataModel.Entities;

namespace $PROJECT_NAME.Repositories

{

public class UserRepository

{

private readonly ApplicationDbContext _context;

public UserRepository(ApplicationDbContext context)

{

_context = context;

}

public async Task<User> GetByIdAsync(int id)

{

return await _context.Users.FindAsync(id);

}

}

}" > Repositories/UserRepository.cs

echo "using $PROJECT_NAME.Contracts;

using $PROJECT_NAME.Repositories;

namespace $PROJECT_NAME.Services

{

public class UserService

{

private readonly UserRepository _userRepository;

public UserService(UserRepository userRepository)

{

_userRepository = userRepository;

}

public async Task<UserDto> GetUserByIdAsync(int id)

{

var user = await _userRepository.GetByIdAsync(id);

if (user == null) return null;

return new UserDto

{

Id = user.Id,

Username = user.Username,

Email = user.Email

};

}

}

}" > Services/UserService.cs

echo "using Microsoft.AspNetCore.Mvc;

using $PROJECT_NAME.Contracts;

using $PROJECT_NAME.Services;

namespace $PROJECT_NAME.Controllers

{

[ApiController]

[Route(\"[controller]\")]

public class UsersController : ControllerBase

{

private readonly UserService _userService;

public UsersController(UserService userService)

{

_userService = userService;

}

[HttpGet(\"{id}\")]

public async Task<ActionResult<UserDto>> Get(int id)

{

var user = await _userService.GetUserByIdAsync(id);

if (user == null) return NotFound();

return user;

}

}

}" > Controllers/UsersController.cs

echo "using Microsoft.EntityFrameworkCore;

using $PROJECT_NAME.DataModel;

using $PROJECT_NAME.Repositories;

using $PROJECT_NAME.Services;

var builder = WebApplication.CreateBuilder(args);

builder.Services.AddControllers();

builder.Services.AddEndpointsApiExplorer();

builder.Services.AddSwaggerGen();

builder.Services.AddDbContext<ApplicationDbContext>(options =>

options.UseInMemoryDatabase(\"$PROJECT_NAME\"));

builder.Services.AddScoped<UserRepository>();

builder.Services.AddScoped<UserService>();

var app = builder.Build();

app.UseSwagger();

app.UseSwaggerUI(c =>

{

c.SwaggerEndpoint(\"/swagger/v1/swagger.json\", \"$PROJECT_NAME API V1\");

c.RoutePrefix = string.Empty;

});

app.UseHttpsRedirection();

app.UseAuthorization();

app.MapControllers();

app.Run();" > Program.cs

# Add packages

dotnet add package Microsoft.EntityFrameworkCore.InMemory

dotnet add package Swashbuckle.AspNetCore

echo "Solution and project $PROJECT_NAME created successfully! Run with 'dotnet run' and open the URL printed in the console (Swagger UI is served at the root path)."

Form Autofiller for Bory Hospital

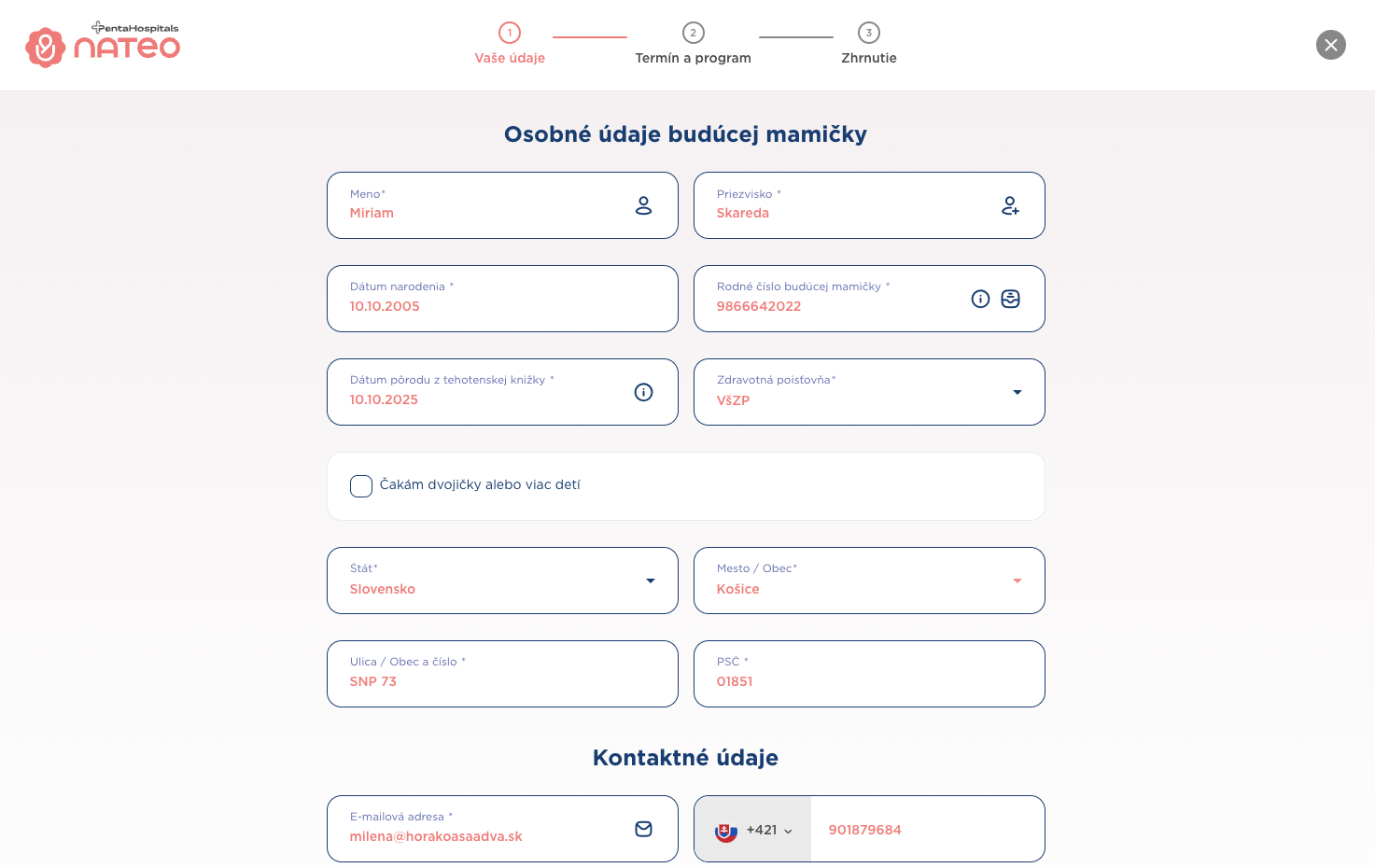

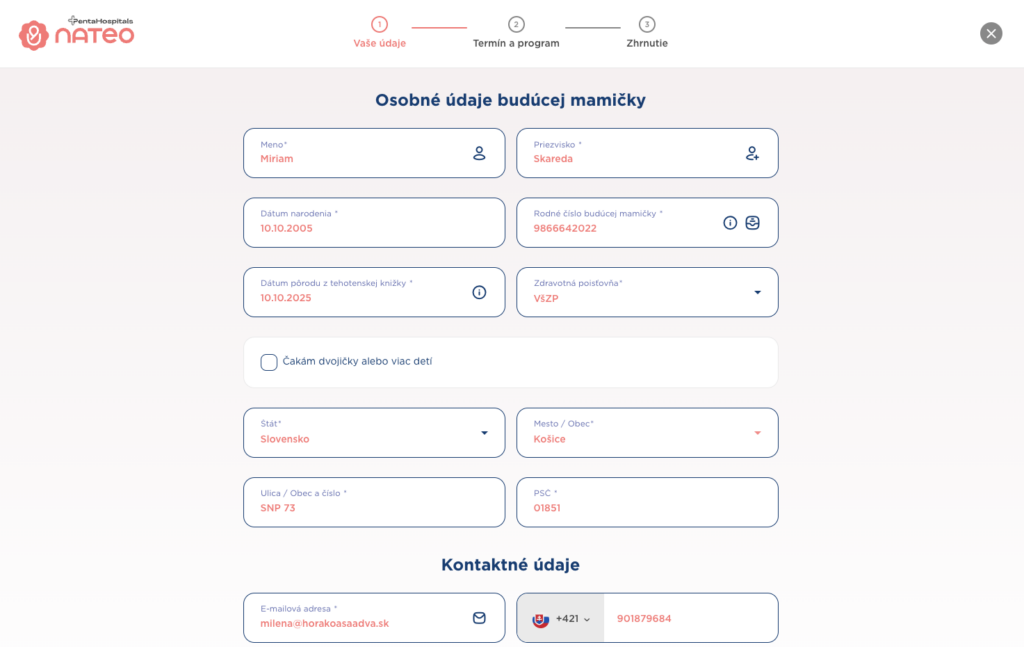

So my friend is expecting and needed to register at Bory Hospital. The tricky part is that the hospital only accepts a limited number of patients each month, so you need to be quick with the registration when spots open up. This Chrome extension fills out everything in seconds, so you don't have to rush through all those fields manually and risk missing out on a spot.

Here's the GitHub link: https://github.com/ivanjurina/bory-registration-autofill

Bory Hospital Form Autofiller

Chrome extension that automatically fills the registration form at https://rezervacie.porodnicabory.sk/register

Features

- Fills personal info

- Sets insurance provider

- Selects state and city

- Handles checkboxes

- Works with Angular Material form components

Installation

- Clone repo

- Open Chrome Extensions (chrome://extensions/)

- Enable Developer mode

- Click "Load unpacked"

- Select extension directory

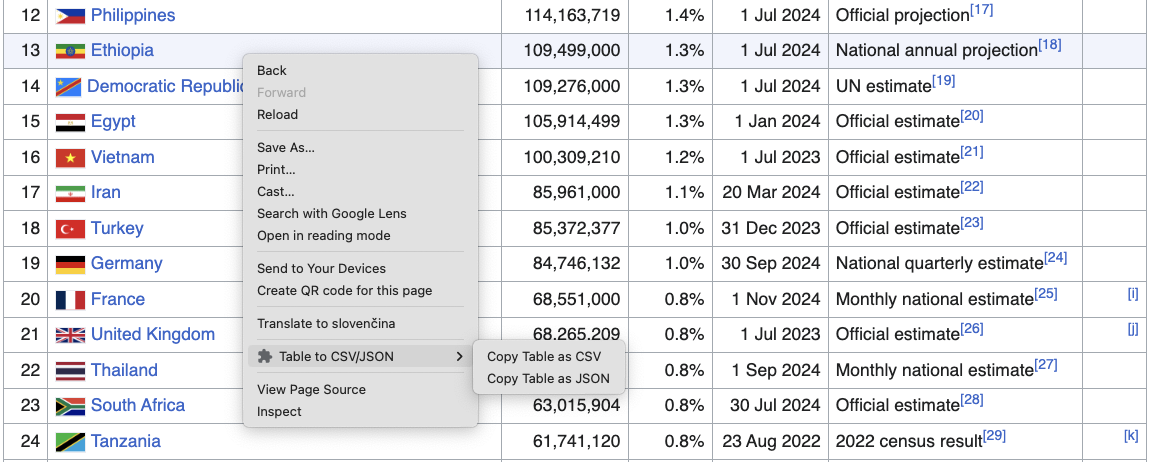

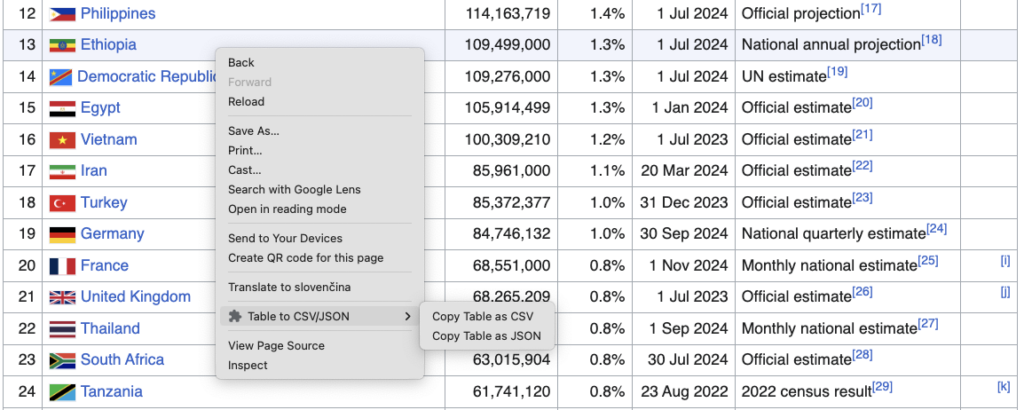

HTML Table to CSV/JSON Chrome Extension

A lightweight Chrome extension that converts HTML tables into CSV or JSON formats with a single click. Perfect for developers and data analysts who need to quickly extract tabular data from websites.

Features

- One-click table conversion

- Supports both CSV and JSON export

- Works with complex nested tables

- Preserves table structure and formatting

- Context menu integration

- Zero dependencies

Installation

- Clone repository

- Open Chrome Extensions (chrome://extensions/)

- Enable Developer Mode

- Load unpacked extension from project folder

Usage

Right-click any table on a webpage and select "Export to CSV" or "Export to JSON" from the context menu.
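To make the conversion step concrete, here is a minimal sketch of turning table rows into CSV and JSON once the cell text has been read from the page. It is not the extension's actual code: the `TableRows` type and the three helper names are hypothetical, and the first row is assumed to be the header.

```typescript
// Each inner array holds the text of one table row's cells.
type TableRows = string[][];

// Quotes a cell when it contains a comma, quote, or line break, and doubles
// any embedded quotes (the usual CSV escaping convention).
function escapeCsvCell(cell: string): string {
  return /[",\r\n]/.test(cell) ? `"${cell.replace(/"/g, '""')}"` : cell;
}

// Joins cells with commas and rows with CRLF line endings.
function rowsToCsv(rows: TableRows): string {
  return rows.map((row) => row.map(escapeCsvCell).join(",")).join("\r\n");
}

// Treats the first row as column names and maps every other row to an
// object. Missing cells become empty strings.
function rowsToJson(rows: TableRows): Record<string, string>[] {
  const [header = [], ...body] = rows;
  return body.map((row) => {
    const record: Record<string, string> = {};
    header.forEach((columnName, i) => {
      record[columnName] = row[i] ?? "";
    });
    return record;
  });
}

const sampleRows: TableRows = [
  ["Name", "Note"],
  ["Ann", 'says "hi", ok'],
];
console.log(rowsToCsv(sampleRows));
console.log(JSON.stringify(rowsToJson(sampleRows)));
```

In the browser, the rows would typically come from walking the clicked table's `tr` elements and reading the text of each `td` or `th`. Nested tables need an extra decision about whether to flatten inner cells or skip them.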

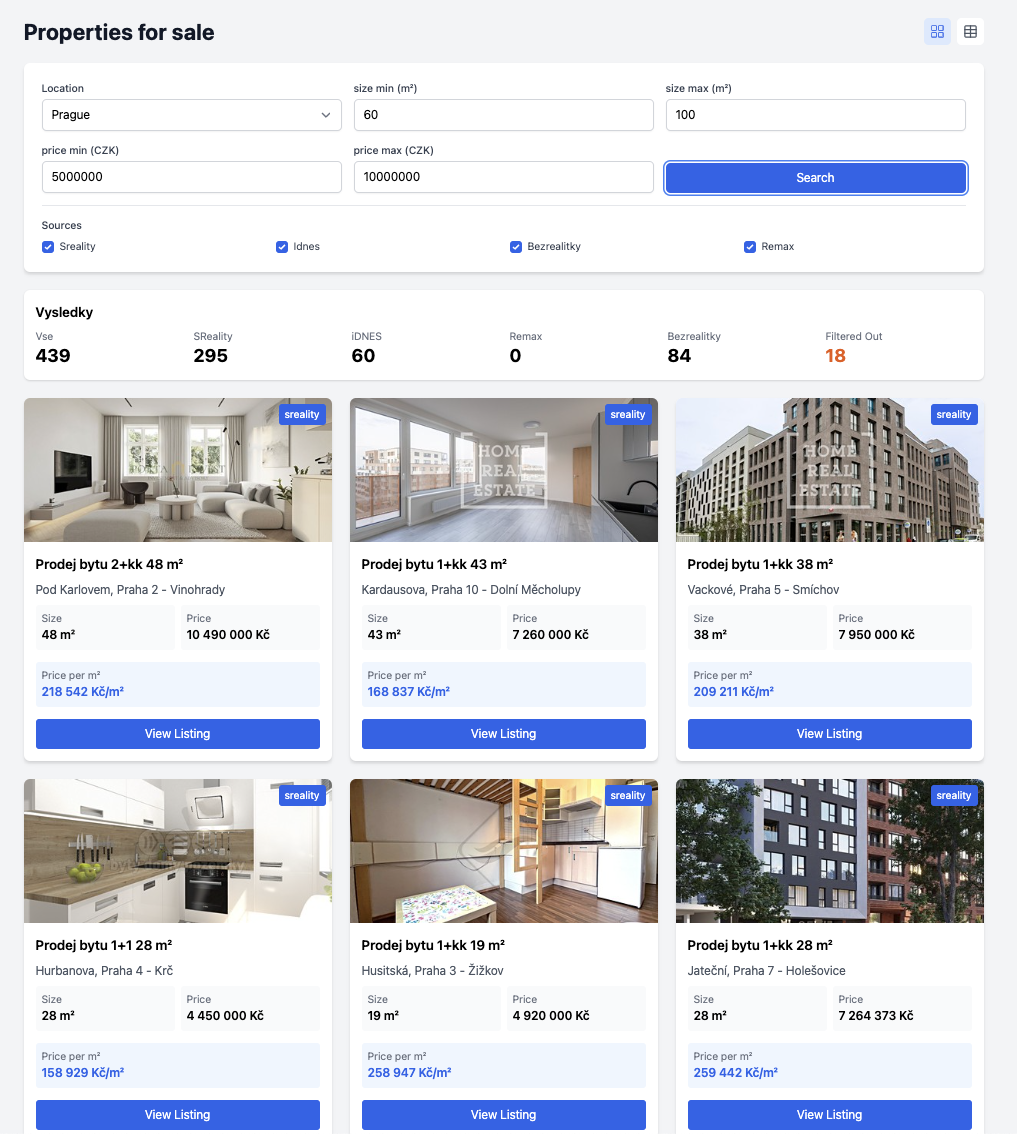

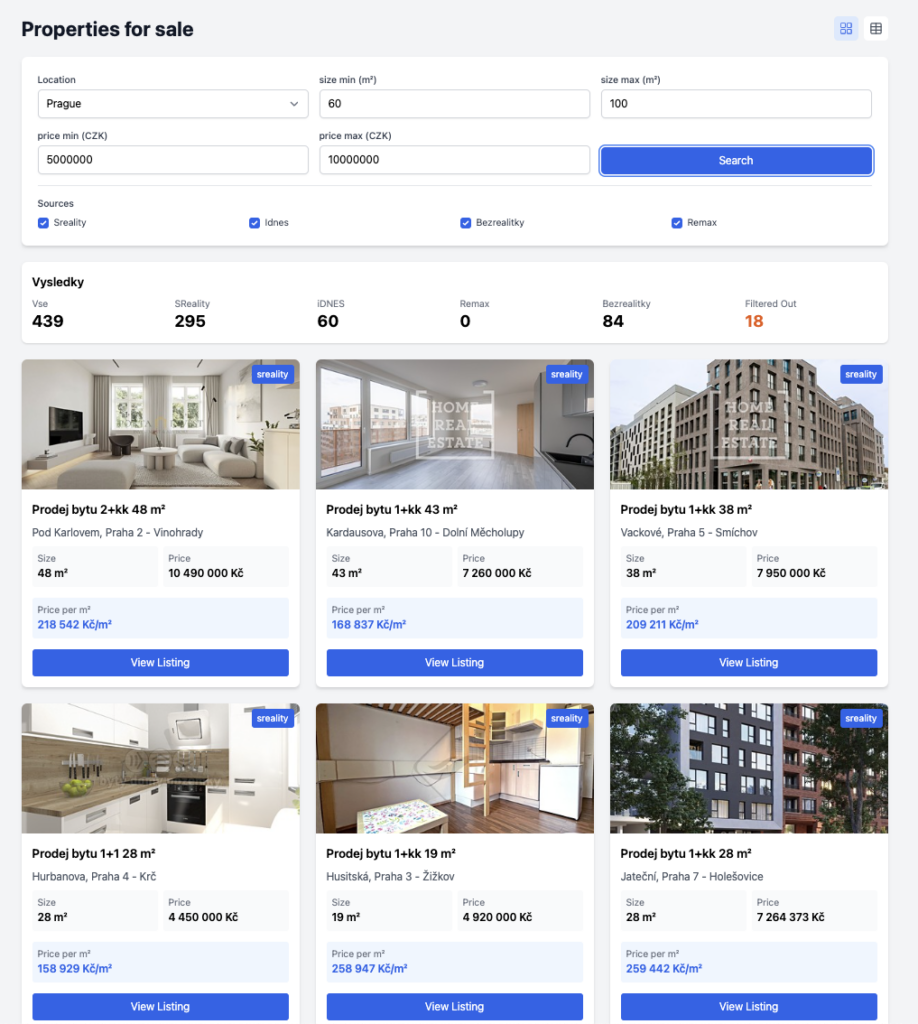

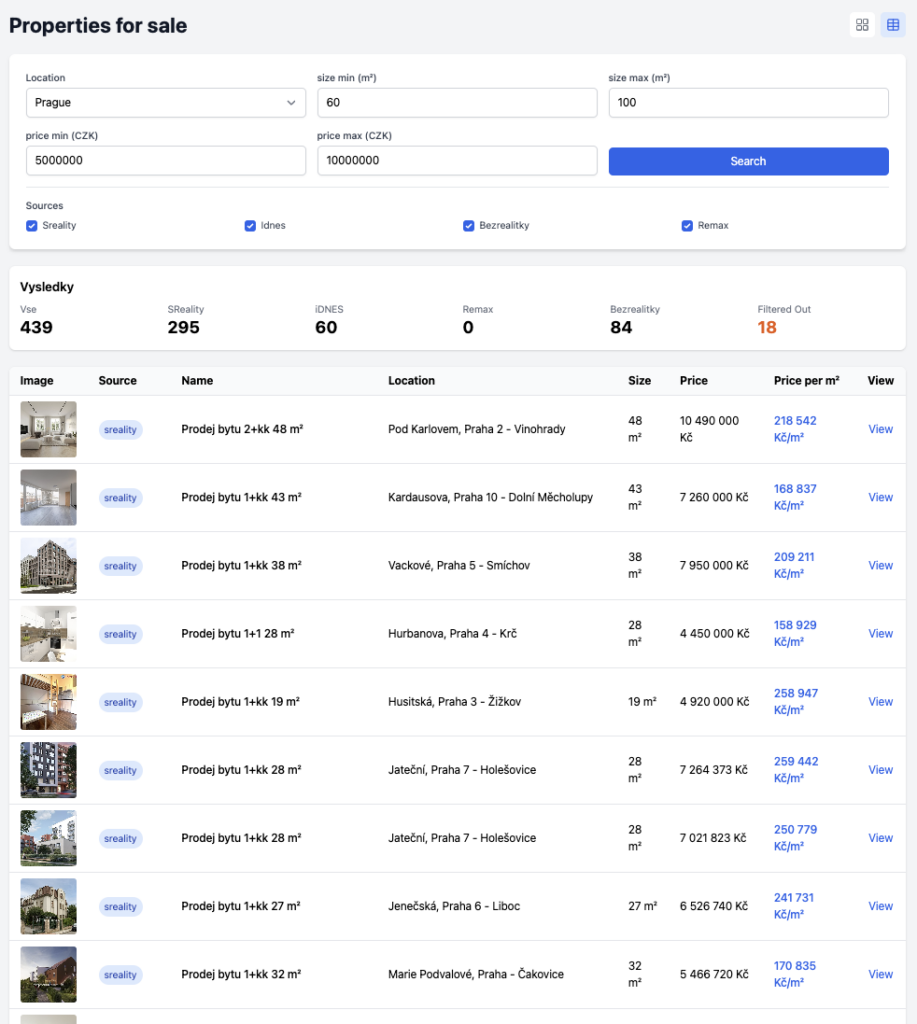

Building a Czech Property Search Aggregator

I recently built a web app that aggregates property listings from the major Czech real estate websites: iDnes, SReality, Bezrealitky, and Remax. Here's what it offers:

Key Features:

- Unified search across multiple property sites

- Filters for location, size (m²), and price range (CZK)

- Price per m² calculations

- Clean, responsive interface showing essential property details

- Direct links to original listings

Technical Stack:

- React frontend with modern UI components

- API integration with major Czech property portals

- Price normalization across different listing formats
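The price normalization and price-per-m² steps can be sketched roughly as follows. The listing formats, the `Listing` shape, and the helper names are assumptions for illustration, not the aggregator's actual code. The parser also assumes whole-crown prices with no decimal part.

```typescript
// Hypothetical normalized listing; field names are illustrative.
interface Listing {
  source: string;
  priceCzk: number;
  areaM2: number;
}

// Extracts an integer CZK amount from strings such as "4 990 000 Kč" by
// dropping everything that is not a digit (spaces, non-breaking spaces,
// dots, the currency label). Returns null when there are no digits at all,
// for example for "price on request".
function parseCzkPrice(text: string): number | null {
  const digits = text.replace(/\D/g, "");
  return digits === "" ? null : Number(digits);
}

// Price per square meter rounded to whole crowns; null when the area is
// missing or not positive.
function pricePerSquareMeter(priceCzk: number, areaM2: number): number | null {
  if (!(areaM2 > 0)) return null;
  return Math.round(priceCzk / areaM2);
}

// Keeps listings whose price falls inside an inclusive CZK range.
function filterByPrice(listings: Listing[], minCzk: number, maxCzk: number): Listing[] {
  return listings.filter((l) => l.priceCzk >= minCzk && l.priceCzk <= maxCzk);
}

const parsedPrice = parseCzkPrice("5 000 000 Kč");
if (parsedPrice !== null) {
  console.log(pricePerSquareMeter(parsedPrice, 50)); // 100000
}
```

Normalizing every source to a plain number first is what makes the shared filters and the per-m² comparison possible across sites that format prices differently.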

https://github.com/ivanjurina/czech-properties-crawler-app

https://github.com/ivanjurina/czech-properties-crawler-api

Hello 2025!

My first post.

Once again.

I've been trying to write a blog for as long as I can remember.

I always spent too much time thinking about what it should look like.

Not much time actually writing it.

2025 is going to be different :)

Do not fear the Cloud

The cloud is one of the modern trends that have fundamentally changed the way business IT operates. And while the startup environment has been quick to adopt it, many companies, especially those in conservative industries, are still hesitant. According to a PwC survey, security concerns keep 63% of companies from moving to the cloud. This is despite the fact that leading banks, which are subject to a number of regulations and have high security requirements, have recently decided to switch. The trend is driven by the largest cloud providers (Amazon, Microsoft, Google), which offer not only performance but also comprehensive support and a whole range of services that cover the needs of most companies.

In terms of security, there are still many prejudices against the cloud, and some companies prefer to run their own servers so that the data stays at their headquarters and therefore physically under their control. This requirement can also be met when using the cloud. The starting point is always to understand the needs and concerns of the company in question; on that basis it is possible to propose an optimal solution and minimize the risks.

Avoid human error

Company representatives who have to decide whether to move to the cloud usually come across familiar arguments - flexibility in system expansion and performance enhancement, decentralization, availability from almost any part of the world, and resilience. But why should company bosses undergo the whole demanding process when they can simply and cheaply buy additional servers as they have been used to?

Moving to the cloud requires looking to the future. The biggest advantage is that you do not have to hire new experts or administrators to take care of the servers. The cost is not only in the purchase of hardware, but also in the salaries of the staff who will have to take care of the ever-growing system. In addition, more staff naturally means more maintenance costs, more security overhead and more room for error.

The security check is usually the transition to the cloud itself

Many managers think their system is secure as long as no one else can see into it. Nothing could be further from the truth. Unless a company has essentially isolated its IT from the rest of the world, it is difficult for it to develop a secure and reliable system on its own. Rather, the opposite is true: in practice, one often encounters cumbersome applications with a complex architecture that even their creators are barely familiar with. The transition to the cloud is usually the first security review that the company goes through.

The security audit helps uncover critical parts of the system. The system then needs to be broken down into parts, the non-functioning parts repaired, and a new architecture designed. The company must weigh up whether it wants to move completely to the cloud or leave the critical infrastructure on its own servers and thus opt for a so-called hybrid cloud. In that case, normal security processes are not enough; you have to look at the system as a whole and consider where the provider's responsibility begins and ends. Without clearly defined boundaries, you are creating problems for yourself in the future.

Know your provider in advance

When moving to the cloud, choose the company you want to trust with your data in advance. Local companies may have an inadequate service offering, limited coverage, and gaps in compliance with standards like GDPR. With global players, the biggest disadvantage may be their size: you can barely reach your provider, let alone audit it. A good clue is whether the provider in question has a local office and meets the required security standards, including the GDPR mentioned earlier. Also remember that it is not enough to audit the provider itself; you need to audit the entire chain, for example if the provider uses third-party software.

Last but not least, do not forget about vendor lock-in, i.e. absolute dependency on the provider's infrastructure or system. Cloud usage usually scales as your business grows, and so does the number of tools you use. These tools are often unique to the provider, and any change is then too expensive or even impossible. Therefore, it is necessary to prepare future scenarios that will help you deal with vendor lock-in.

The cloud is not only an infrastructure, but also a set of necessary services

Large cloud providers not only provide the infrastructure itself (IaaS - Infrastructure as a Service), but also an entire platform with a wide range of integrated tools (PaaS - Platform as a Service), such as databases, tools for data backup and system monitoring, all optimized for hardware or performance. Some providers also add their own office tools for the day-to-day operation of the business (G Suite, Office 365), but also enable rapid deployment of CRM or BI.

These are things that a normal enterprise would struggle to keep running, in terms of time and money. Another advantage of the cloud is that support and security are handled by entire teams of developers and specialists. Native backup support, for example, has such a high SLA (service-level agreement) that data loss is virtually impossible. The cloud is also highly resistant to DDoS attacks (which consist of sending a huge number of requests, often from a large number of computers, with the aim of overwhelming the server and thereby shutting down the service) - the system detects them and redirects the onslaught outside the company's system, so there are no outages.

The biggest risk is not the technology, but the employees

The biggest risk in enterprises is the human factor. In the case of the cloud, this means first and foremost mastering administrative security, that is, limiting access and change rights according to roles on the team. The second area is managing the devices that log on to the internal network: you need to verify that the hardware in question is secure. Ideally, the company will provide its employees with dedicated work devices. Otherwise, the risk must be handled with special software.

The cloud itself is fully encrypted, so there is no need to worry that someone could access your data. The providers' employees are usually ISO certified, and the system also immediately detects if someone is working with the data without authorization.

The cloud grows with the business

Every company wants to grow and expand its business. Today, this is no longer possible without developing the IT system. The cloud grows with the company: additional power can be obtained cheaply and with a few clicks. It also overcomes the risks typical of on-premise systems. It can handle large load peaks, such as the peak load in an e-shop before Christmas, and power companies use it to calculate network capacity. It is also very easy to expand to a new country, which is precisely why it is so popular with startups planning to expand abroad.

Global availability is also linked to the trend toward remote collaboration. According to PwC, using the cloud boosts employee performance by up to 30%, aside from the aforementioned savings on employees taking care of the infrastructure. In short, it's an unstoppable global trend that both small businesses and software giants are jumping on. Atlassian or SAP, for example, are moving away from server solutions and moving their services to the cloud, which is only a monthly expense for companies. This eliminates many worries and allows them to focus on their own business.

Introduction to ChatGPT and OpenAI

ChatGPT and OpenAI are changing the world of artificial intelligence. ChatGPT is a language model developed by OpenAI that is capable of generating human-like text responses to prompts. OpenAI, on the other hand, is an artificial intelligence research lab that is focused on creating advanced AI systems that can solve complex problems.

ChatGPT is one of OpenAI's most impressive achievements to date. It is a neural network that has been trained on a massive dataset of text from the internet. This dataset includes everything from news articles to social media posts and is designed to provide ChatGPT with a broad understanding of the English language.

One of the most impressive features of ChatGPT is its ability to generate text that is difficult to distinguish from human-written content. This means that it can be used in a variety of applications, including chatbots, content creation, and customer service.

OpenAI, the organization behind ChatGPT, is a research lab that is focused on advancing artificial intelligence. Their goal is to create advanced AI systems that can solve complex problems and improve our understanding of the world around us.

In addition to ChatGPT, OpenAI has developed a range of other advanced AI systems, including GPT-3, the language model family that ChatGPT grew out of. They have also created advanced robotics systems, such as Dactyl, a robotic hand system capable of manipulating objects with remarkable dexterity.

The potential applications of these technologies are vast. They could be used to create more intelligent chatbots that are capable of handling more complex tasks, or to develop new forms of content creation that are more efficient and accurate. They could also be used to develop new tools for scientific research, or to create more advanced robotics systems that can perform complex tasks in a range of different environments.

Overall, ChatGPT and OpenAI are two of the most exciting developments in the world of artificial intelligence. They represent a major leap forward in our ability to create advanced AI systems that can solve complex problems and improve our understanding of the world around us. As these technologies continue to evolve, we can expect to see even more exciting developments in the years to come.

OpenAI's ChatGPT changing the world as we know it

ChatGPT from OpenAI, the powerful new artificial intelligence chatbot, is the talk of the town. If you want to know what's behind it, here are the basics.

What is ChatGPT?

ChatGPT is an AI tool that allows users to create unique texts. This includes answering questions, creative prompts, and creating interesting content like poems, songs, and short stories.

When did ChatGPT come out and where did it come from?

OpenAI developed ChatGPT and released it in November 2022. OpenAI was co-founded in 2015 by a group that included Elon Musk and Sam Altman, was funded in part by controversial right-wing billionaire Peter Thiel, and is led by CEO Sam Altman.

How to use ChatGPT

To get set up with OpenAI, all you need is an email address and a phone number, then you can use the chatbot. Ask it questions or give it commands - like "write me a Raymond Chandler story about the McDonald's Hamburglar" - and get creative!

How does ChatGPT work?

ChatGPT is built on a large language model trained on huge amounts of text data, which lets it respond realistically in natural language.

Who is ChatGPT suitable for?

Anyone can use it as long as they create an OpenAI account.

Are ChatGPT's responses always correct?

No, not always! The platform is known for making things up. So if you want to rely on ChatGPT's answers for important questions, you should double-check them beforehand.

Does ChatGPT cost money?

Initially, no - but users can pay a subscription fee (known as 'ChatGPT Plus'), which gives them priority access to new features and improvements and lets them use the chatbot at peak times, when it might otherwise be unavailable due to its popularity. Free users do not need to bother with a subscription unless they have big plans for this chatbot!

What is "Generative AI?"

Generative AI is the branch of artificial intelligence that creates new content, such as text, images, audio, or video; ChatGPT is a prominent example. Other applications include art creation, video production, simulation of human actors and voices, and other tasks in the knowledge economy and creative industries.